![[Joe Clark: Accessibility | Design | Writing] Joe Clark: Accessibility | Design | Writing](http://joeclark.org/joeclark-angie-02IX.jpg)

![[Joe Clark: Accessibility | Design | Writing] Joe Clark: Accessibility | Design | Writing](http://joeclark.org/joeclark-angie-02IX.jpg)

Josélia Neves, from Portugal, is a researcher at the School of Arts, Roehampton University, University of Surrey. She has written a thesis, provided in partial defence of her Ph.D., with the title Audiovisual Translation: Subtitling for the Deaf and Hard-of-Hearing. Upon my request, she provided me with a copy of the CD-ROM containing the thesis and many appendices.

The thesis and other documents are saved as overlarge, untagged PDFs with unnecessary security features, like the prevention of copying text from the document. This does nothing to prevent any supposed piracy of the document and mainly succeeds in annoying researchers like me who wish to critique the document. I printed and read most of the 357-page document and my comments are as follows.

I cannot emphasize enough how the use of the malapropism “subtitling for the deaf and hard-of-hearing” influences the entire perspective of the thesis. What she calls SDH is actually called captioning even if the Portuguese language cannot differentiate between captioning and subtitling and even if the British refuse to.

If you start from the vantage point that captioning is really subtitling, except that it isn’t really subtitling, it’s subtitling for the deaf and hard-of-hearing, then you immediately start out with a patronizing attitude based on translation theory. Captioning is not translation, and, while “audiovisual translation” as a field of study may occasionally intersect with captioning, it is a fatal mistake to act as though the following statements are true:

We have been doing captioning on a regular basis for 30 years. It is not up to researchers in audiovisual translation to redefine what we’re doing as subtitling.

I would certainly agree that translation theory is applicable to captioning in the cases where foreign-language films are captioned and not subtitled (quite a common occurrence now on NTSC DVDs). I am not willing to go along with the fiction that captioning is really a particular, probably inferior, kind of subtitling. It is wrong from first principles.

Neves simply dismisses “captioning” as “the American term.” In fact, the U.K. and Ireland are unique among English-speaking countries in calling captioning subtitling. Canada, the U.S., Australia, and New Zealand call captioning captioning. In short, if you prefer to call captioning subtitling, you’re outnumbered.

As I have explained ad nauseam already, this is not a case of using multiple terms for the same thing (boot/trunk or lorry/truck). It is a case of using the same term for two different things (subtitling/subtitling and subtitling/captioning). “Most of the time,” Neves writes, “there is a need to contextualize the use of the term to grasp its intended meaning.” Not if you use the right term. Captioning can only mean captioning.

Things become even more perverse when an attempt is made to discuss closed vs. open captioning. Neves seems to prefer the locution “closed captions or teletext subtitling,” which is not only wordy but simultaneously too precise and not precise enough. Teletext captions are closed captions. Closed captions don’t have to be teletext (Cf. the Line 21 system). As an example of how Neves gets this wrong, she writes “With the emerging digital age, closed captioning and teletext subtitling may soon become obsolete.” How? Digital TV in the U.K. and North America, among other places, still provides closed captioning. (In fact, Line 21 and teletext are still supported, although those signals are translated to digital broadcasting.) Should anyone be keen on the malapropism “closed subtitling,” be aware that it refers to written translations you have to turn on or opt into and isn’t captioning in any form.

Josélia Neves’s entire thesis concerns captioning. She may apply subtitling principles and theory to that discussion. But, perhaps unbeknownst to her, and irrespective of whatever howling objections she and the British may raise, what she’s been doing all along is studying and writing about captioning.

I recognize this may come as a shock. The truth often does.

The people Neves consistently calls “hearers” are actually “hearing people” in English.

Neves points out that “interlingual SDH” – that is, captions in a language separate from the audio – “is becoming a growing feature on DVD releases, notably from English into German and Italian.”

Neves notes a “misconception in relation to subtitling for the Deaf and HoH that SDH is always intralingual,” that is, takes place within the same language as the audio.

[V]ery soon, it may be possible for viewers to choose the type of subtitles they want to view.... This may mean a change in font, colour or position on screen, but it may also mean being able to choose graded subtitles to preferred reading speeds.

HDTV captions already let you choose font and colour. I don’t know of any system that permits a change of position, except for oddball offscreen displays. Dual captioning channels at different reading speeds have in fact been attempted, as with the Arthur example, a final report on which is long overdue.

The author correctly points out – and I’m glad somebody else has – that “[v]ery few research projects get to be known by the professionals who actually do the subtitling, a fact that hinders progress towards the improvement of quality standards.”

Neves’s bibliography is as lengthy as you’d expect in a Ph.D. thesis and manages to cite a few papers here or there about actual captioning. I would say she is missing most of the existing published research on captioning. By starting out with the misapprehension that her topic was subtitling, she naturally ended up focussing on subtitling papers, even subtitling papers that pretend to be talking about captioning but are actually concerned with a debased or special form of subtitling.

I am not sure why more research on captioning was not included; surely it would not have been difficult to do a literature search on that term, or else why were the handful of papers on captioning actually included?

Neves’s basic unfamiliarity with captioning and her ideology as a subtitler betrays itself in her discussion of one of the features that differentiate captioning and subtitling – the provision of non-speech information like sound effects and speaker identification.

Most SDH solutions [sic] today... add supplementary information to fill in some important aspects of the message that may derive from the non-verbal acoustic coding systems.

(Did you understand that?)

In some cases, this is done through an exercise of transcoding, transferring nonverbal messages into verbal codes. This practice often results in an overload of the visual component. It alters the value of each constituent and, above all, adds enormous strain to the reading effort, for much of what hearers perceive through sound needs to be made explicit in writing. The extra effort that has to be put into reading subtitles often bears on the overall reading of the audiovisual text and can diminish the enjoyment of watching it for some viewers, a fact which is equally valid for SDH and common subtitling [sic].

These passages amount to an unvarnished claim that adding something as simple as [Phone ringing] to a transcript of “verbal messages” will overload some viewers. Neves cites no research at all to back this up, and didn’t conduct any such research herself. While this is clearly her opinion and she’s welcome to it, her opinion has no basis. I think it’s pretty simple: If we were really overloading deaf viewers with non-speech information, then we’d just have been using same-language subtitling (all-bottom-centre positioning, editing down to two lines only, no NSI at all) over the past three decades.

Non-speech information is presented only occasionally in captioning because it only occasionally comes up (and in some genres, like TV commercials, it almost never does). Out of 1,500 captions in a two-hour movie, maybe 20 to 50 of them might be NSI in some sense, including explicit speaker IDs. This would be pretty easy for a researcher to check; in fact, you could do it while sitting at home watching TV. Maybe the number is ten or 100, but it won’t be 500 or 1,000. NSI is uncommon in captioning even if it is essential.

If deaf people couldn’t keep up with captions due to the addition of occasional NSI, we would have heard complaints already. I find it interesting that Neves later goes on to advocate the use of smileys in captioning, all or nearly all of which have to be explained up front, while implicitly claiming that a couple of words in plain English will bring the reading of captions to a screeching halt ([captions screech]).

I think Neves has the issue of caption reading speed quite wrong, and this too stems from looking at the problem as one of subtitling. Subtitlers inhabit a curious parallel universe where up is down, in is out, red is green, and nobody at all can read more than two lines of text running at a speed beyond 130 words a minute. In this universe, no deaf people or other captioning viewers, who have been ticking away at 200 words a minute and faster for an entire generation, can actually be found.

One of the aspects that is highly conventionalized

– that is, accepted without experimental proof –

and that is considered to be fundamental to the achievement of readable subtitles is reading speed. It is a fact that, in general, cinema and VHS/DVD subtitles achieve higher reading rates than subtitles on television programs. This is justified by the fact that cinemagoers and DVD users are usually younger and more literate in the reading of audiovisual text [quotes various subtitlers as references].

Oh, dear. Wait till Neves learns that this is, in fact, false. Captions on North American TV, cinema, and “VHS/DVD” have always shared the same speed. Over the years, that shared speed has increased as captioners finally figured out that they aren’t subtitlers and that their job is not to adopt a condescending attitude and reduce the source dialogue to a couple of lines.

If Neves is talking about captioning for kids, then the correct method is to provide verbatim captions on one channel and edited captions on another. The method more typically encountered in the U.K. and Ireland and Europe is to simply treat every viewer as a quasi-illiterate and edit caption speed down to next to nothing, even after such habits were contradicted by research (PDF).

The author goes on quite a tear (pp. 137–139) quoting subtitling researchers who enthusiastically verify their own chauvinisms and assumptions about reading speed, all of which boil down to a patronizing belief that subtitlers have to dumb down subtitles so the unwashed can actually read them. Neves supports a subtitling (sic) speed of 150 to 180 words a minute across the board as a maximum, and quotes some other subtitling researchers as claiming, apropos of nothing, that deaf people are worse readers, so captions should be even slower than that.

She completely ignores research by Jensema documenting that captioning viewers read upwards of 200 wpm quite comfortably. In fact, Neves places children and deaf people in the same category of slow readers (“findings... of the longer time taken by children to read subtitles, and the often-mentioned fact that deaf adults tend to have the reading ability of a nine-year-old hearing child”).

In effect, Neves quotes research from one field to back up assertions she misapplies to another field. Additionally, deaf adults are not children and Neves cannot prove they have the reading ability of children.

And we’re not done yet. Neves manages to quote Michael Erard’s profile of me (while never, ever mentioning me elsewhere in her thesis) as Erard explains the evolution of captions from a very slow reading speed to near-verbatim. “It is still questionable if viewers in general can keep up with the speechrates that are used in many live broadcasts of news events,” she writes.

First of all, I would appreciate it if Neves would concern herself with either “some” viewers or “viewers in general,” but not both, when discussing caption speed. I know of no research on eyetracking or comprehension of captioning viewers watching real-time captioning, but given that they can read pop-on captions at 200 wpm and above, I doubt that reading real-time captions at such speeds is “questionable.” Even if it is, how does Neves suggest that real-time captioners edit utterances that are delivered live and haven’t even finished being uttered yet?

She then quotes a 1996 U.K. report essentially stating that, while viewers claim to want verbatim captions, they really can’t keep up with them. The research documented a near-even split of preferences between verbatim and edited; not only is that not a ringing endorsement of edited captions, there are no pairs of similar shows with edited vs. verbatim real-time captions that one could actually test. In any event, the findings were contradicted by later research (PDF).

Neves cites her own research showing that Portuguese deaf subjects could “had difficulty following subtitles at a rate of 180 wpm that had been prerecorded and devised with special care to ensure greater readability.... Matters got worse when these viewers read subtitles that had not been devised with special parameters in mind.” While this research is interesting, it exists in the context of Portugal, where captioning is new. Neves does not explain how much experience the deaf viewers had with captioning, nor does she investigate whether reading speeds increased with experience. (That was the case in Jensema’s research even with caption-naïve hearing people and even over very short periods of watching captioning.)

Neves thinks that research such as this makes verbatim captioning “questionable,” but admits the industry trend is toward verbatim captioning. She concedes that deaf people and hard-of-hearing people may have different needs, particularly due to their different educations, but simply states that “[b]y providing a set of subtitles for all, we risk the chance of not catering for the needs of any.” Her solution, of course, is to edit absolutely everything, which can be described as destroying the integrity of all programming for the unproven needs of an undetermined few. If reading speed is really an issue, then give us multiple streams of captions.

“[H]earing-impaired people” – the choice of that term is dubious based on what she’s about to write – “seldom acknowledge that they cannot keep up with the reading speeds imposed by some subtitles.... One only becomes aware of the amount of information that is left out when objective questioning takes place and it becomes obvious that important information was not adequately received.” A research citation would be useful here.

Paradoxically, Neves does not view “editing” as the answer to the problem of caption speed. Simply leaving words out “may result in the loading of the reading effort.” This seems plainly nonsensical on its face, especially as she defines editing (“the clipping of redundant features and the reduction of affordable information”), but that’s what she believes. She would apparently prefer “the use of simpler structures or better-known vocabulary” (p. 148). Yet, one page later, she states that it is untrue that deaf people “cannot cope with complex vocabulary. Actually, subtitles may be addressed as a useful means to improve the reading skills of deaf viewers, as well as an opportunity to enrich both their active and passive vocabular[ies].”

Neves appears to want Portuguese captioners to rewrite Brazilian Portuguese into national Portuguese even though, by her admission (p. 303), that simply did not work very well and resulted in captions that were hard to understand. Well, here’s a question: Are not hearing viewers inconvenienced exactly the same way? Isn’t it almost as taxing for a national-Portuguese speaker to understand spoken Brazilian Portuguese as it is to understand Brazilian Portuguese in written form?

And here we have further reiteration of standard subtitler shibboleths about “subtitle” length. Neves discards out of hand the idea of a three-line caption. Tell me: What if the speaker is at screen left and the lines are left-justified and short? Is it still wrong?

Neves pays as much attention to typography as most captioners do, i.e., next to none. Here are my public questions for her: If typography is the instrument of captioning, why does it merit only a paragraph or two in your thesis? Why are you so concerned with caption-editing instead of actual legibility and readability of captions?

She notes (p. 188) that teletext decoders do not always carry a full Portuguese character set, listing çãê as missing characters (and presumably also ÇÃÊ, tellingly overlooked).

[Other viewers] complained that the letters were compressed and difficult to decipher. The ç and g letters, for instance, were often a problem. These instances were sufficient to make us aware of the way fonts alone may condition reception.

Neves also notes the confusability of ! and l in a real-world example. There is some gobbledygook from two competing “standards” documents about whether or not to type a space before exclamation point and question mark. These wouldn’t be full spaces in print typography, and rounding them up to full spaces in monospaced caption fonts is a mistake. But that’s what Neves and her team decided to do anyway “after testing.”

Translators working on interlingual subtitling (for hearers)

That’s the only kind of subtitling there is.

reacted to the introduction of such spaces [reacted how?], but agreed to them once they became aware of the reasons why they had been used.

I note with approval that any impetus toward all-capitals captioning was scotched right off the bat: “Deaf people in Portugal reacted badly to subtitles using upper case and considered that they were more difficult to read.” They are.

Colour captions were shown not to work as a reliable identifier of speaker – “a cause for confusion rather than an asset.” It was later decided “that colours would not be used to identify speakers but to differentiate labels with explanatory notes” – it’s called non-speech information – “from subtitles containing actual speech.” But Neves goes on to contradict this advice for simple interview and chat shows.

Neves states that the Spanish “standard” for colour assignment is nonsensical in parts and contradicts the British “standard.”

Neves believes that colour captions are another practice that “loads the decoding effort” and makes captions harder to read. She also points out the obvious – that, in some productions, there are more characters than colours. I would have appreciated some research to back up her claims here, and some acknowledgement that nearly all U.S. and Canadian captioning has been monochrome forever.

This part of Neves’s thesis left me agog. She seriously proposes using smileys to convey emotion in captions. (She does the standard intellectual thing and calls them “emoticons,” a term that is only ever used as a euphemism for “smiley.”) Neves is apparently something of a fan of smileys, using them as actual data in a table on p. 307, in which smiling, neutral, or frowning faces are used to represent viewer responses to different problems in captioning.

Neves has gone to some length to discuss the issues involved in verbatim captioning (she’s not really in favour of it) and even mentions how typography can hinder understanding, yet she’s a staunch proponent of adding strings of ASCII punctuation to captioning as some way of “helping” the viewer.

Two out of five smileys initially tested were misread by deaf subjects. She even admits the futility of all-punctuation smileys: “Even though it might be more difficult to read :-( than to see the meaning of ☺ or [another graphical smiley], the first allows for greater interpretative freedom for the latter.” In other words, punctuation is the better thing to use to accurately communicate emotion because it can be read several ways. You should use punctuation to communicate clearly because it is ambiguous and open to interpretation.

And by the way: :-( is a sad face but ☺ is a smiling face. Neves cannot even get the smileys right in her own thesis.

The Portuguese settled on nine smileys (could you jot down nine that you know right now?), but they had to be tweaked after testing, which goes to show that they are unreliable. The use of smileys was “non-disruptive and was welcomed by many.” Well, that’s irrelevant. What proportion of people understood them correctly all the time? (Less than 100%? Then why are we using them?)

The whole issue is poorly handled by Neves, who later notes: “The use of emoticons did not exclude other ways of visualizing paralinguistic information, such as expressive punctuation.” Well, if you think you have exactly two choices at hand – smileys from a teenage girl’s text messages or a row of exclamation points – well, yes, of course the smileys look more attractive. What I want to know, though, is why Neves never really discusses (from what I can tell, she never discusses at all) the idea of writing things out in words. You know, like writing out manner of speech: [Sarcastically] or (doubtfully) or [Under her breath]. I suppose that might be too many words for the deaf person to read. It might involve more than two lines of text on a screen, a definite no-no.

But it works perfectly well in captioning in the U.S., Canada, Australia, and the U.K., all of which I’ve seen personally. What evidence does Neves have that Portuguese captioning viewers have different brains from those of captioning viewers in the four countries I mentioned? What is so unique about the Portuguese language that simply writing out manner of speech in words cannot be done?

In a full section on icons (pp. 250–251), Neves at last recognizes that “a dog can bark, whimper, snarl and yelp, for instance, [and] any hyperonomic icon will always fall short of the implied meanings of these different sounds.” Having proven for herself the fallacy of icons as a method of communication, she spends the rest of the paragraph explaining that icons must be tested and that deaf people will just have to get used to them.

Neves notes (p. 237) the fact that running multiple speakers on the same lines in the same caption block, as is the norm in the U.K., is hopelessly confusing at times. (Our dear British friends haven’t even figured out how to type a carriage return to differentiate speakers.) Neves does not discuss the ambiguities that colour captions create in clarifying who is speaking as opposed to making it obvious that a new person is speaking. Neves specifically mentions this problem later (p. 236) when discussing the use of figure dashes, not that she calls them that, to introduce the words of new speakers.

Nonetheless, Neves is hopelessly wedded to the idea of using punctuation to indicate what the use of plain words would actually state. Instead of proposing that a caption like (Phone rings) be given when a phone rings or that MARIA (on phone): be listed when that’s where Maria’s voice is heard, we get a whole runaround about adding quotation marks or using some kind of icon for a phone. They tried that with early Canadian Telidon captioning. It didn’t work then and it won’t work now.

Tell me: What kind of phone just rang? (The phone on the desk, the phone in Maria’s purse, the payphone in the booth?) Is it still ringing or did it just ring once? Is the phone at the other end of the line ringing? How do you tell any of that from an icon? You can’t. But you can write it out. Neves should realize this, as her §4.3.6.2, “Sound effects,” discusses the linguistic issues involved in writing out non-speech information.

Neves contradicts herself another way shortly when she proposes that captioners be as specific and descriptive as possible in captioning music. In a telenovela, for example, the “option that was chosen was to provide exact information about the musical pieces” being played. “Many hearers have reported that they turn on the teletext because they enjoy complementing their viewing with the extra information.” Writing things out works fine in annotating music for deaf and for hearing viewers. Why won’t it work anywhere else? Why do we have to use smileys?

“Even though the National Captioning Institute did try to introduce line 21 captioning in Europe”: It became Line 22 captioning (the L is always capitalized) and was available on home video only. Hence the claim that “their efforts were not very successful due to the strong position held by the British teletext system” is false. Teletext captions cannot be stored on VHS tapes (noted on p. 114); Line 22 captions can (not noted anywhere). Teletext was used on TV; Line 22 wasn’t. The two systems were mutually exclusive.

“The first TV program to be aired with captioning for the deaf” – surely we mean SDH? – “was an episode of The French Clef” – no, The French Chef – “to be followed by an episode of Mod Squad.” I have read here and there of a captioned Mod Squad episode, but I do not know it actually happened. (I’d love to watch it, though. Wouldn’t you?)

Neves’s description of the technical limitations of Line 21 (p. 109) is not totally accurate anymore, particularly on the issue of lack of descenders and how it has “standardized closed captions in the USA to be basically written in upper case.” On p. 111, there is an implication that teletext can carry more data than Line 21 can, which is surely true if you’re talking about teletext as a whole. Line 21 captioning has two full channels of captioning available, either of which can be divided in half if need be. On teletext, you get one page of captions and that’s it.

Neves gets cinema captioning somewhat wrong. “Rear window systems may be found in some cinemas in the USA and in Europe”: About 200 cinemas in the U.S. (still a drop in the bucket, but not “some”) and a dozen or so in Canada all use the WGBH Rear Window® system. No cinemas in Europe do; they all use open captioning. (Try explaining any of the foregoing using variants of the word “subtitling.”) She also gets the true nature of Rear Window quite wrong: “[I]nstead of having special showings with open SDH” – for God’s sake, it’s open captioning – “deaf people may request the service to be activated at any cinema where the system is available.” No, it’s always on.

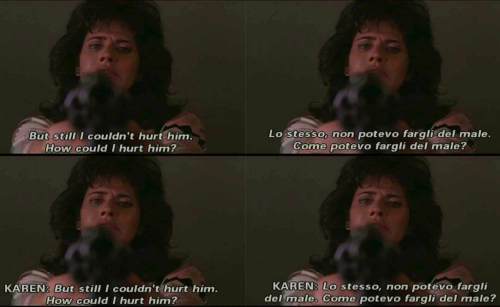

Neves provides a delightful set of screenshots of captions and subtitles (some of the former also crossing a language barrier) from a couple of movies, chiefly Goodfellas. Left to right, top to bottom: English and Italian subtitles, then English and Italian captions (the latter adding the speaker ID KAREN:).